Recently, Chris Rice, VP of AT&T Labs, presented ECOMP, the engine behind our software-centric network. A key component of ECOMP is DCAE, which stands for Data Collection, Analytics and Events. DCAE brings the power of Big Data to software-defined networking (SDN). It collects, manages, stores and analyzes data for an ecosystem of control-loop automation systems as well as network and cloud services.

Imagine a world where everything is data-driven. Your network is resilient, self-healing and self-learning. You can create, remove and instantly expand smart virtual functions, whether southbound network devices (e.g., firewalls, routers and switches) or northbound cloud services (e.g., customer care, ambient video, virtual reality and the Internet of Things). This is possible with the power of ECOMP and DCAE.

DCAE’s Four Major Components

- Analytics Framework: A data development and processing platform with a catalog of micro-services – stand-alone, policy-enabled, elementary analytics functions that support structured and unstructured data processing.

- Collection Framework: A set of streaming and batch collectors that support virtualized network, device and infrastructure data.

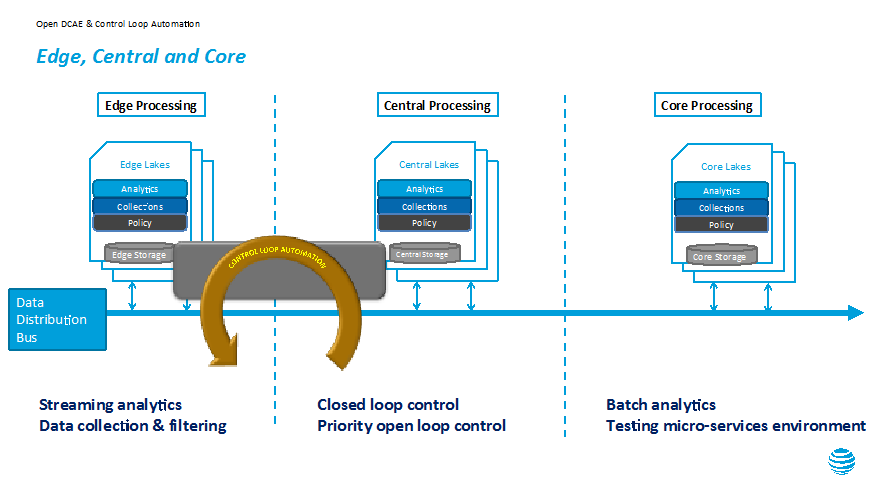

- Data Distribution Bus: ECOMP’s “blood stream.” It enables different components within DCAE and ECOMP to publish and subscribe to data as well as move data from the edge of the network downstream for processing.

- Persistence Storage Framework: A data-storage platform for short-term and long-term consumption.
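The publish/subscribe role of the Data Distribution Bus can be sketched as a minimal in-memory Python example. The class `DataBus`, the topic `vnf.metrics`, the message fields and the 0.9 CPU threshold are hypothetical; this post does not describe ECOMP's actual bus API, and a production bus would be distributed and durable rather than a single in-process object.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Message = Dict[str, object]
Handler = Callable[[Message], None]


class DataBus:
    """Hypothetical in-memory publish/subscribe bus (illustration only)."""

    def __init__(self) -> None:
        # topic name -> list of subscriber callbacks
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Message) -> int:
        """Deliver message to every subscriber of topic; return delivery count."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


# A collector at the network edge publishes; an analytics micro-service
# and a storage sink downstream both subscribe to the same topic.
bus = DataBus()
stored: List[Message] = []
alerts: List[Message] = []

bus.subscribe("vnf.metrics", stored.append)  # persistence sink
bus.subscribe(
    "vnf.metrics",
    lambda m: alerts.append(m) if m["cpu"] > 0.9 else None,  # toy analytics rule
)

delivered = bus.publish("vnf.metrics", {"vm": "fw-01", "cpu": 0.95})
print(delivered, len(stored), len(alerts))  # 2 1 1
```

Because the collector only names a topic, new analytics micro-services or storage sinks can be attached without changing the collector; that decoupling is what lets the bus move data from the edge downstream to whoever needs it.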

Besides these four components, DCAE includes an orchestrator and a set of controllers that manage the data bus and micro-services and interpret different service designs and workflows.

DCAE is commonly referred to as "Open DCAE" and adopts an open-source philosophy. It fosters a marketplace of data and of collector and analytics micro-services. With this approach, we can create a secure ecosystem where developers, third-party providers, data scientists and engineers can contribute to the platform. The platform also supports analytics, collection, policy enablement, compute and storage at the edge of the network, in central locations (regional cloud zones) and at the core (where batch-mode processing is enabled). This lets us optimize compute, data distribution and storage resources to achieve the desired scale, performance, reliability and cost efficiency.
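The placement trade-off across edge, central and core can be illustrated with a small Python sketch that picks the cheapest tier meeting a latency bound. The latency and cost figures and the `Tier`/`place` names are hypothetical; only the three tiers and the fact that batch-mode processing runs at the core come from the text, and real orchestration would weigh many more factors (security, reliability, data volume).

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Tier:
    name: str
    latency_ms: float      # hypothetical latency to the data sources
    cost_per_unit: float   # hypothetical relative compute/storage cost
    supports_batch: bool


# Hypothetical figures for illustration only.
TIERS: List[Tier] = [
    Tier("edge", latency_ms=5, cost_per_unit=3.0, supports_batch=False),
    Tier("central", latency_ms=30, cost_per_unit=1.5, supports_batch=False),
    Tier("core", latency_ms=100, cost_per_unit=1.0, supports_batch=True),
]


def place(max_latency_ms: float, needs_batch: bool) -> str:
    """Pick the cheapest tier that meets the latency bound and batch need."""
    candidates = [
        t for t in TIERS
        if t.latency_ms <= max_latency_ms and (t.supports_batch or not needs_batch)
    ]
    if not candidates:
        raise ValueError("no tier satisfies the requirements")
    return min(candidates, key=lambda t: t.cost_per_unit).name


print(place(10, needs_batch=False))   # edge
print(place(50, needs_batch=False))   # central
print(place(500, needs_batch=True))   # core
```

A latency-sensitive collector lands at the edge despite its higher cost, while a batch analytics job falls through to the cheaper core.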

Open DCAE enables a new generation of intelligent services that can run faster and smarter than before. By capturing data from the edge of our network, we can analyze data securely and efficiently to identify and eliminate potential security threats. By taking data from virtual machines supporting virtual firewalls and routers, we can also apply machine learning and advanced analytics to perform closed-loop automation (the automatic identification and resolution of failures). Those actions may include shifting traffic to new virtual machines or rebooting those machines during off-peak times.
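The detect-then-act pattern of closed-loop automation can be sketched in Python. This is a simple rule-based stand-in, not the machine learning and advanced analytics the post refers to; the error-rate threshold, the 01:00 to 05:00 off-peak window, and the names `VmSample` and `decide_action` are all hypothetical.

```python
from dataclasses import dataclass
from datetime import time
from typing import Optional

# Hypothetical values chosen for illustration only.
ERROR_RATE_LIMIT = 0.05        # fraction of failed requests tolerated
OFF_PEAK_START = time(1, 0)    # 01:00
OFF_PEAK_END = time(5, 0)      # 05:00


@dataclass
class VmSample:
    vm_id: str
    error_rate: float   # observed fraction of failed requests
    healthy_peers: int  # other VMs able to absorb this VM's traffic


def is_off_peak(now: time) -> bool:
    return OFF_PEAK_START <= now < OFF_PEAK_END


def decide_action(sample: VmSample, now: time) -> Optional[str]:
    """One pass of a toy detect -> decide loop; None means no failure seen."""
    if sample.error_rate <= ERROR_RATE_LIMIT:
        return None  # healthy: nothing to do
    if is_off_peak(now):
        return f"reboot {sample.vm_id}"  # safe to reboot right away
    if sample.healthy_peers > 0:
        return f"shift traffic from {sample.vm_id}"  # peak: move load first
    return f"schedule reboot of {sample.vm_id} for off-peak"


print(decide_action(VmSample("fw-01", 0.12, 3), time(14, 30)))
# shift traffic from fw-01
```

In a full loop, the returned action would be handed to a controller and the next round of collected data would confirm whether the failure was resolved.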

However, developing Open DCAE poses some key challenges:

- Scaling the processing, management and movement of large-scale, heterogeneous data, from structured to unstructured and from messages to events and files.

- Development, certification and automated onboarding of micro-services. These functions are typically developed in different environments, by different teams, and in different languages and styles, which makes automated onboarding and certification tricky.

- Optimization and orchestration of services by composing collector and analytics micro-services at the edge, central or core of the network to achieve efficiency, cost effectiveness, security and reliability.

Despite those challenges, we surpassed our 2015 goal of virtualizing 5% of our network, reaching 5.7%. We plan to accelerate to 30% in 2016, with ECOMP at the center of our software-centric network.

Read more about ECOMP and send us your thoughts.

Mazin Gilbert – Assistant Vice President, Intelligent Systems and Platform Research, AT&T Labs

See more at: http://about.att.com/innovationblog/dcae
